Domokos Sármány, Production Services, ECMWF Forecast Department and Miloš Lompar, Department of Numerical Weather Prediction (NWP), Serbian Met Service

Miloš Lompar, a Member State employee, swapped his seat in the Department of Numerical Weather Prediction of the Serbian Met Service for a seat in the Production Services Team (Development Section) at ECMWF for a nine-month secondment. Miloš worked particularly closely with Domokos Sármány, who led ECMWF’s participation in the Maestro project.

Here Domokos and Miloš reflect on the progress and success this collaboration has brought.

How much forecast data can NWP produce?

Quite a lot, but never enough. ECMWF’s Integrated Forecasting System (IFS) currently produces 120 tebibytes (TiB) of operational forecast data per day. But the need for increased model resolution for the sake of ever more accurate weather forecasts means this figure is dwarfed by the amount of data future forecasts will produce. This poses a technological challenge.

Numerical weather prediction (NWP) has always been an early adopter of technological innovation in the field of high-performance computing (HPC) and deploys some of the best-performing supercomputers in the world – the latest example of which is ECMWF’s upcoming Atos supercomputer (Figure 1). However, the technical challenges facing scientists have changed over the years.

For decades, the sheer rate and associated computational cost of performing floating-point operations (flops) were the primary concern. This has resulted in a software stack and a set of programming models that are optimised for floating-point computation but treat data handling as a second-class citizen. Accessing sufficient data in memory and, more recently, throughput to storage have become significant bottlenecks in the past two decades – a phenomenon often referred to as the input/output (I/O) performance gap.

Figure 1: The Centre’s next supercomputer will be made up of four Atos BullSequana XH2000 clusters and is planned to become operational in 2022. (Image: Atos)

70% of operational forecast data is consumed by the Centre’s product generation engine (PGen) to create the final products for dissemination to Member and Co-operating States and commercial customers.

Product generation is a highly I/O intensive process. In a time-critical window of one hour, the forecast output is continuously being written to disk while PGen is reading and processing the data as it becomes available. The resulting I/O congestion is one of the major bottlenecks in the Centre’s current operational system. With the planned increases in model resolution to 5 km, or even 1 km, in the coming years, there is a clear need to explore new avenues in the field of data movement.
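To put the quoted figures in perspective, the short calculation below derives the daily volume read by PGen and the average write rate implied by 120 TiB per day. It is only a back-of-the-envelope sketch: the even spread over 24 hours is an assumption made for illustration, and the real output is concentrated in time-critical windows, so peak rates will be considerably higher.

```python
# Back-of-the-envelope figures derived from the numbers quoted in the text.
daily_output_tib = 120   # operational forecast data per day (from the text)
pgen_share = 0.70        # fraction consumed by PGen (from the text)

# Volume that PGen has to read back every day.
pgen_input_tib = daily_output_tib * pgen_share

# Average write rate, ASSUMING output is spread evenly over 24 hours.
# This is a lower bound on what the storage system has to sustain.
seconds_per_day = 24 * 3600
avg_write_gib_per_s = daily_output_tib * 1024 / seconds_per_day

print(f"PGen input per day: {pgen_input_tib:.0f} TiB")
print(f"Average write rate: {avg_write_gib_per_s:.2f} GiB/s")
```

This gives roughly 84 TiB per day for PGen and an average of about 1.4 GiB/s, before any increase in model resolution.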

Towards a new middleware for data movement – the Maestro project

ECMWF collaborated with leading users and providers of HPC technologies in the Maestro project. The project’s goal is to introduce a novel technology, the Maestro middleware, for efficient data movement across complex scientific workflows.

The project considered five different scientific applications, one of which was NWP with testing through its IFS benchmark. ECMWF’s participation formed part of the ECMWF Scalability Programme – helping to ensure ECMWF can exploit the full potential of future computing architectures and laying the foundation for ever higher-resolution forecasts.

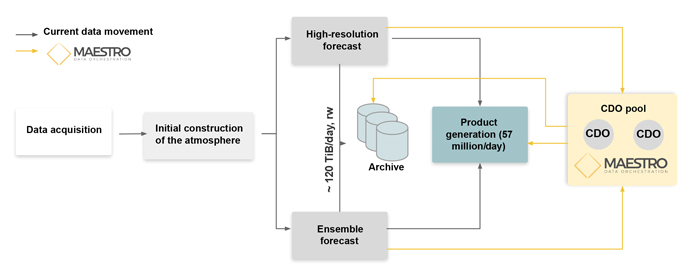

Figure 2 shows a potential use of the Maestro middleware in the ECMWF operational workflow.

Figure 2: Potential structure of the ECMWF operational workflow using the Maestro middleware. A Core Data Object (CDO) represents a fundamental unit of data, such as a single two-dimensional global field.

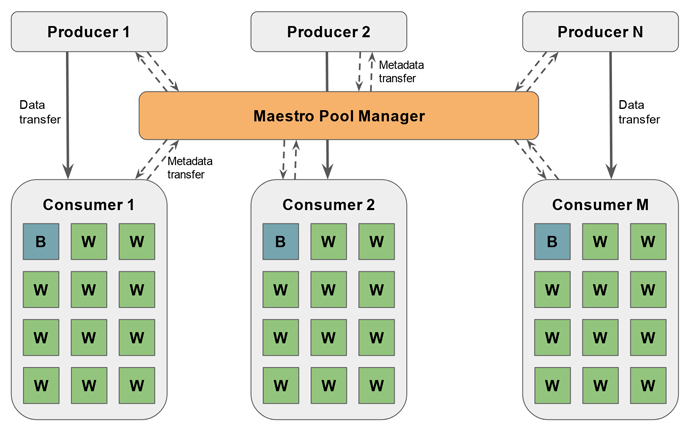

We have adapted one of ECMWF’s I/O benchmarks (simplified workflows to measure I/O performance) to mimic passing data from the forecast model to PGen via the Maestro middleware. Figure 3 sketches a high-level design of this solution. A key component is the Maestro pool manager, which orchestrates data movement between the data producers and data consumers. In ECMWF’s real workflows, the producers would be the high-resolution and ensemble forecasts generating model output data. The consumers would be instances of PGen processing the forecast data into products for our Member and Co-operating States and commercial customers. Each consumer is implemented as a multithreaded program with a broker responding to data availability notifications from the pool manager and multiple workers carrying out the postprocessing work.

Figure 3: An I/O benchmark adapted to the Maestro middleware. A pool manager (PM) orchestrates the data flow between multiple producers generating a mock weather forecast and multiple consumers running product generation. All metadata communication is executed via the PM while the data transfer occurs directly between the producer and the consumer. Each consumer is implemented as a multithreaded program with one broker (B) and multiple workers (W).
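The broker-and-workers structure of a consumer can be illustrated with a minimal Python sketch. This is not the benchmark code or the Maestro API: `run_consumer`, the notification format and the `process` callback are all hypothetical names chosen for illustration, and a plain list stands in for the notifications that would arrive from the pool manager.

```python
import queue
import threading


def run_consumer(notifications, process, n_workers=4):
    """Hypothetical consumer: one broker thread feeds several worker threads."""
    tasks = queue.Queue()
    results = []
    lock = threading.Lock()

    def broker():
        # Forward each data-availability notification to the task queue.
        for note in notifications:
            tasks.put(note)
        # One stop signal per worker so that every worker terminates.
        for _ in range(n_workers):
            tasks.put(None)

    def worker():
        while True:
            note = tasks.get()
            if note is None:
                break
            product = process(note)  # stand-in for the postprocessing work
            with lock:
                results.append(product)

    threads = [threading.Thread(target=broker)]
    threads += [threading.Thread(target=worker) for _ in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


# Mock notifications: (ensemble member, forecast step) pairs.
notes = [(member, step) for member in range(3) for step in range(4)]
products = run_consumer(notes, lambda n: f"product-m{n[0]}-s{n[1]}")
print(len(products))
```

Keeping the broker separate from the workers means that notifications can be accepted as soon as they arrive, even while all workers are busy with earlier fields.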

Crucially, the design shown in Figure 3 decouples the metadata communications from the data transfer and avoids writing either to disk. Data transfer between nodes of the supercomputer is particularly fast thanks to the middleware’s use of remote direct memory access (RDMA), a mechanism that allows two nodes to access each other’s memory without involving the processor, cache or operating system of either. As a result, only the output products generated by PGen are written to disk.
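The separation of the metadata path from the data path can be sketched in a few lines of Python. The classes below are purely illustrative and do not reflect the Maestro API: `Producer`, `PoolManager`, `consume` and the identifier `"t2m/step=6"` are hypothetical, and an ordinary in-process method call stands in for the RDMA read between nodes.

```python
class Producer:
    """Holds field data in its own memory (stand-in for a compute node)."""

    def __init__(self, name):
        self.name = name
        self.memory = {}

    def offer(self, pool_manager, cdo_id, payload):
        self.memory[cdo_id] = payload
        # Only metadata is sent to the pool manager, never the payload.
        pool_manager.register(cdo_id, owner=self, size=len(payload))

    def read(self, cdo_id):
        # Stand-in for an RDMA read of the producer's memory.
        return self.memory[cdo_id]


class PoolManager:
    """Keeps a catalogue of where each data object lives."""

    def __init__(self):
        self.catalogue = {}

    def register(self, cdo_id, owner, size):
        self.catalogue[cdo_id] = {"owner": owner, "size": size}

    def locate(self, cdo_id):
        return self.catalogue[cdo_id]


def consume(pool_manager, cdo_id):
    meta = pool_manager.locate(cdo_id)   # metadata path: via the pool manager
    return meta["owner"].read(cdo_id)    # data path: directly from the producer


pm = PoolManager()
model = Producer("forecast-0")
model.offer(pm, "t2m/step=6", bytes(16))  # 16 zero bytes as a mock field
field = consume(pm, "t2m/step=6")
print(len(field), pm.catalogue["t2m/step=6"]["size"])
```

In this sketch the pool manager never touches the field itself, so its load depends on the number of objects announced rather than on their size.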

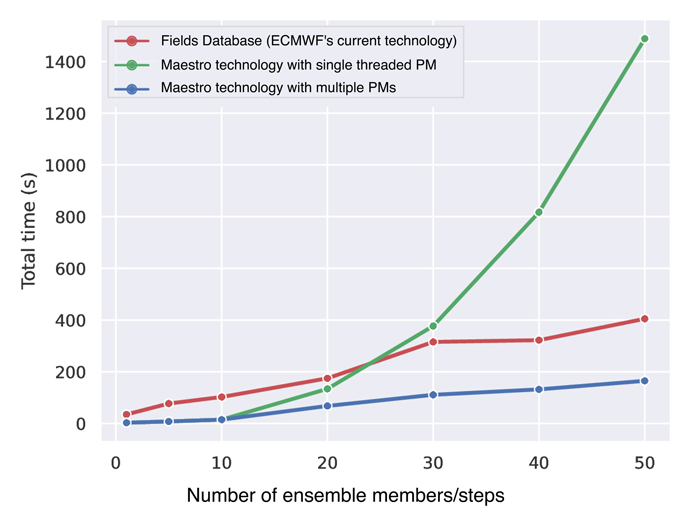

Figure 4: Total workflow execution times for different numbers of ensemble members and forecast steps: current technology, ECMWF’s Fields Database (FDB) (red); the Maestro technology with a single-threaded pool manager (PM) (green); the Maestro technology with multiple single-threaded pool managers (PMs) as a way to simulate a multithreaded pool manager (blue).

Figure 4 shows the benchmarking results up to a configuration of 50 producers and 50 consumers. Two conclusions may be drawn from the results. First, metadata communication is the critical factor: it is what slows down the benchmark when a single pool manager is used (green line in Figure 4). Second, a significant speedup (up to 2.5 times) may be achieved with this innovative technology, provided enough pool managers are used (blue line compared with red and green in Figure 4).

The Maestro technology is still at an early stage of development, but our prototype implementation shows a potential pathway towards a technology that may alleviate one of the major obstacles to creating more accurate global operational forecast data.

Secondment successes

A secondment provides an opportunity for much deeper knowledge transfer and wider-ranging collaboration between ECMWF and its Member and Co-operating States, to complement ECMWF’s training sessions, workshops and liaison visits. In this case the benefits were even greater.

The successful completion of the Maestro project would have been impossible without the opportunity for Miloš to join ECMWF on a nine-month secondment. Miloš implemented the final version of the benchmark adapted to the new Maestro middleware. He had to get his hands dirty with many of the major Production Services products, including MultIO, PGen, FDB and MIR (Meteorological Interpolation and Regridding software). He has gained extensive knowledge of the programming practices and development environments and processes used at ECMWF.

The secondment was an excellent opportunity not only to strengthen ties with colleagues in the Production Services Team, but also to establish working relationships with other major players in the HPC landscape: Jülich, CEA, Appentra, ETH Zürich, CSCS, Seagate and HPE (formerly Cray).

Acknowledgements

The Maestro project has received funding from the European Union’s Horizon 2020 research and innovation programme through grant agreement 801101.

Top banner credit: agsandrew / iStock / Getty Images Plus