Global reanalyses provide the most complete picture currently possible of past weather and climate, because they make use of all available observations combined with the best short-range output from the most recent forecasting systems. Here we describe a new set of software libraries, called MultIO, that has been developed so that ECMWF’s future ORAS6 ocean reanalysis can feed efficiently into its next atmospheric reanalysis, ERA6.

MultIO

Reanalyses are widely used in climate-assessment studies and, more recently, in training weather-forecasting models based on machine learning. ECMWF has a long history of producing both atmospheric and ocean reanalyses, with the latest atmospheric reanalysis being the fifth-generation iteration, called ERA5, and the latest ocean reanalysis being ORAS5. It is now preparing for the next generation of reanalysis, ERA6, which will also include ocean data from the next-generation ocean reanalysis, ORAS6.

As a result, it was imperative that the ocean model be able to archive data in ECMWF’s archival system, MARS, and do so efficiently. In an early evaluation, we deemed XIOS, the existing output library used by recent versions of the NEMO ocean model, insufficient for this purpose because of its tight coupling to the netCDF data format. Instead, we have developed MultIO, a set of software libraries that offer two related but distinct functionalities:

- An asynchronous I/O‑server to decouple data output from model computations.

- User-programmable processing pipelines that operate on model output directly, accessing data while it is still in memory, thus reducing the storage I/O operations required for some of the most frequent post-processing tasks.
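
To illustrate the first of these functionalities, the following is a minimal Python sketch of how an asynchronous I/O‑server decouples output from computation. It is not MultIO code: a background thread stands in for dedicated I/O tasks, and the names `io_server`, `run_model`, `inbox` and `archive` are hypothetical. The toy model hands each field to a queue and carries on with the next time step, while the server drains the queue independently.

```python
import queue
import threading


def io_server(inbox, archive):
    """Drain (step, field) items from the queue until a None sentinel arrives."""
    while True:
        item = inbox.get()
        if item is None:
            break
        step, field = item
        # Stand-in for slow output work: store the field mean for this step.
        archive.append((step, sum(field) / len(field)))


def run_model(n_steps, inbox):
    """Toy time-stepping loop that never waits for output to complete."""
    state = [0.0, 1.0, 2.0, 3.0]
    for step in range(n_steps):
        state = [x + 1.0 for x in state]  # the "computation"
        inbox.put((step, list(state)))    # copy, so later steps cannot alter queued data
    inbox.put(None)                       # tell the server that the run has finished


inbox = queue.Queue()
archive = []
server = threading.Thread(target=io_server, args=(inbox, archive))
server.start()
run_model(3, inbox)
server.join()
print(archive)
```

The unbounded queue is a simplification. A real server would need to limit how much data can be buffered, so that a slow storage system cannot exhaust memory.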

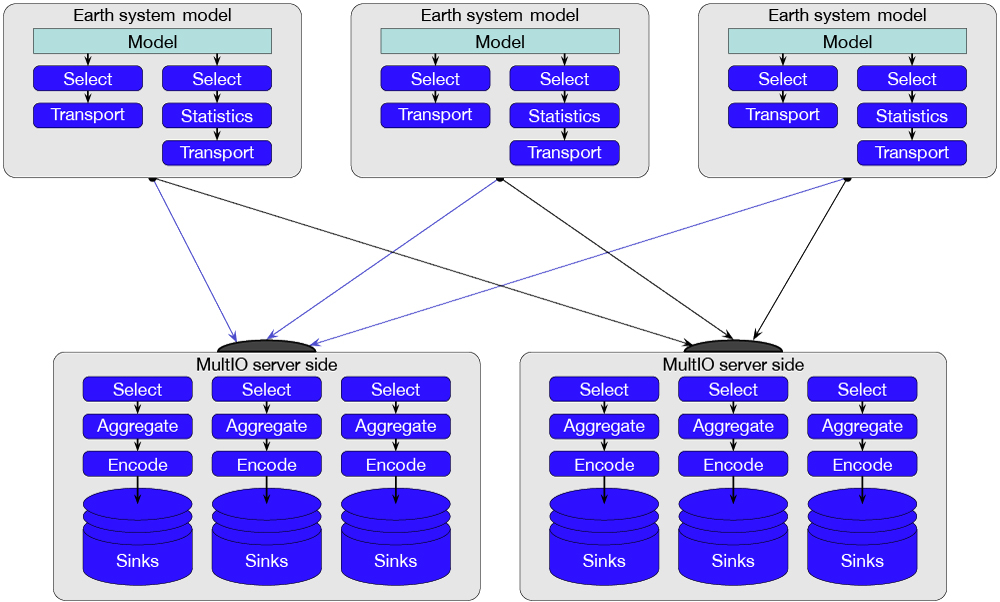

MultIO is a metadata-driven, message-based system. This means that the I/O-server and processing pipelines fundamentally handle and operate on discrete packets of data, self-described with attached metadata, called messages. The metadata attached to each message drive the behaviour of the I/O‑server, the data-routing decisions, and the selection of actions. The type and volume of post-processing may be controlled by setting the message metadata via the Fortran/C/Python APIs, and by configuring a processing pipeline of actions. The first figure shows an overview of the pipelining and I/O‑server design.
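
The idea of metadata driving routing and the selection of actions can be sketched in a few lines of Python. This is an illustrative toy under our own assumptions, not MultIO's API or configuration syntax: the `Message` class, the `select`, `scale` and `tag` actions, and the metadata keys are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Message:
    """A discrete packet of data, self-described by its attached metadata."""
    metadata: dict
    payload: list


def select(**wanted):
    """Pass a message on only if its metadata match every given key."""
    def action(msg):
        matches = all(msg.metadata.get(k) == v for k, v in wanted.items())
        return msg if matches else None
    return action


def scale(factor):
    """Multiply every payload value by a constant, leaving metadata unchanged."""
    def action(msg):
        return Message(dict(msg.metadata), [x * factor for x in msg.payload])
    return action


def tag(**extra):
    """Attach extra metadata, for example information needed by an encoder."""
    def action(msg):
        return Message({**msg.metadata, **extra}, msg.payload)
    return action


def run_pipeline(actions, msg):
    """Apply the actions in order; stop as soon as one of them drops the message."""
    for action in actions:
        msg = action(msg)
        if msg is None:
            return None
    return msg


# Hypothetical pipeline: keep only "sst" messages, rescale them, mark the target format.
sst_plan = [select(param="sst"), scale(0.5), tag(format="grib2")]
out = run_pipeline(sst_plan, Message({"param": "sst", "step": 1}, [2.0, 4.0]))
dropped = run_pipeline(sst_plan, Message({"param": "salinity", "step": 1}, [35.0]))
```

Here the pipeline never inspects the payload to decide what to do. The metadata alone determine whether a message is processed or dropped, which is the property the design relies on.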

MultIO's roles in achieving the aims of ORAS6 are three‑fold:

- Aggregating horizontal global fields from distributed data.

- Computing temporal means from data produced at every time step.

- Injecting the metadata information required for encoding all necessary fields in a GRIB2 format approved by the World Meteorological Organization (WMO), and encoding the data by calling the ‘ecCodes’ library.
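
The first two roles can be sketched as follows. This is a simplified Python illustration, not MultIO's implementation: it assumes that each compute task supplies the global indices of the points it owns, it uses a flat list in place of a horizontal grid, and the names `aggregate` and `RunningMean` are hypothetical. GRIB2 encoding is left out, because it depends on ecCodes.

```python
def aggregate(parts, global_size):
    """Assemble a global field from (global_indices, values) pairs, one per task."""
    field = [None] * global_size
    for indices, values in parts:
        for i, v in zip(indices, values):
            field[i] = v
    if any(v is None for v in field):
        raise ValueError("global field is incomplete")
    return field


class RunningMean:
    """Incremental temporal mean that keeps only one field in memory."""

    def __init__(self):
        self.count = 0
        self.mean = None

    def update(self, field):
        self.count += 1
        if self.mean is None:
            self.mean = list(field)
        else:
            self.mean = [m + (x - m) / self.count for m, x in zip(self.mean, field)]
        return self.mean


# Two time steps, each delivered as partial fields from two hypothetical tasks.
steps = [
    [([0, 1], [1.0, 2.0]), ([2, 3], [3.0, 4.0])],
    [([0, 1], [3.0, 4.0]), ([2, 3], [5.0, 6.0])],
]
mean = RunningMean()
for parts in steps:
    result = mean.update(aggregate(parts, global_size=4))
print(result)
```

Updating the mean incrementally avoids storing every time step, which is what makes it practical to compute such statistics while the data are still in memory.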

The data governance and curation process has also been a crucial and independent part of the overall work. The process has resulted in around 90 new ocean parameters, approved by the WMO.

Pre-production evaluation

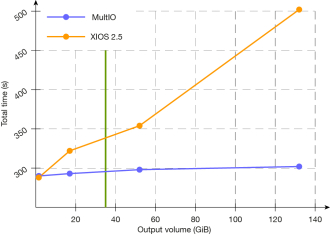

MultIO will enter production as the engine for model output for ORAS6 in early 2024. This will, in turn, feed into the ERA6 production runs. The nature of ocean reanalysis is that many small or medium-sized data assimilation trajectories are executed on the high-performance computing system, rather than a few large runs. Although the actual details for production are still to be decided, the following is expected to be close to the final configuration: quarter-degree (eORCA025) ocean grids; thousands of five-day assimilation loops; 11 ensemble members; 300 compute tasks per member; 20 I/O tasks per member. With this topology, a single loop with one ensemble member takes around five minutes, but this may vary with output volume.
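
As a quick check on the scale this implies, the task counts quoted above can be combined as follows. The figures are the expected values given in the text, and the total assumes that all ensemble members run concurrently, which is our assumption rather than a stated production detail.

```python
members = 11          # ensemble members
compute_tasks = 300   # compute tasks per member
io_tasks = 20         # I/O tasks per member

tasks_per_member = compute_tasks + io_tasks
total_tasks = members * tasks_per_member   # assumes all members run at the same time
io_share = io_tasks / tasks_per_member     # fraction of each member's tasks used for I/O

print(tasks_per_member, total_tasks, io_share)
```

In this configuration, one task in sixteen within each member is dedicated to output.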

The MultIO vs XIOS graph shows the execution times of a single assimilation trajectory with increasing throughput. The likely production output volume is shown by the green vertical line. The execution time increases gently as data is output using MultIO, making simulation runs feasible even at throughput far exceeding what is planned for production. By comparison, we include the equivalent timings when data is output using XIOS, a popular choice among NEMO users. Although performance would still be acceptable in the likely production configuration, the cost would quickly become prohibitive for higher output volumes. This suggests that XIOS would be unsuitable for time-critical runs of coupled models.

Outlook

MultIO is currently able to output the ocean parameters required for production in GRIB2 format. However, if research experiments require a wider range of parameters, there is an option to output in netCDF format via XIOS, or even to output both GRIB2 and netCDF simultaneously using both MultIO and XIOS. In the future, MultIO is likely to be extended to support netCDF output directly, as part of an international collaboration. Work is also ongoing to replace the IFS’s own Fortran I/O‑server with MultIO for all model output.