Numerical weather prediction models face an increasingly diverse landscape of supercomputing architectures. The overarching trend is the shift from purely CPU‑based platforms to heterogeneous systems with GPU accelerators. The resulting increase in computing cores and memory bandwidth offers significant potential for attaining higher numerical resolution and energy efficiency. However, challenges arise because model programming and implementation depend on the specific hardware, and efficient execution requires targeted optimisation. Supporting several hardware types inevitably makes the code more complex, and that code must be organised carefully to maintain productivity.

To achieve this for ECMWF’s non-hydrostatic FVM dynamical core, which was previously implemented in Fortran, we are rewriting and further developing FVM with GT4Py, the domain-specific library of the Swiss National Supercomputing Centre (CSCS) and ETH Zurich. Both CSCS and ETH Zurich are also working closely with MeteoSwiss in a related project that is porting the ICON model to GPUs using GT4Py. ECMWF benefits greatly from the leading software development efforts of these partners and of the KILOS project at ETH Zurich, which is funded by the Platform for Advanced Scientific Computing (PASC). At ECMWF, the GT4Py efforts proceed alongside the ongoing GPU adaptation of the operational Integrated Forecasting System (IFS) model.

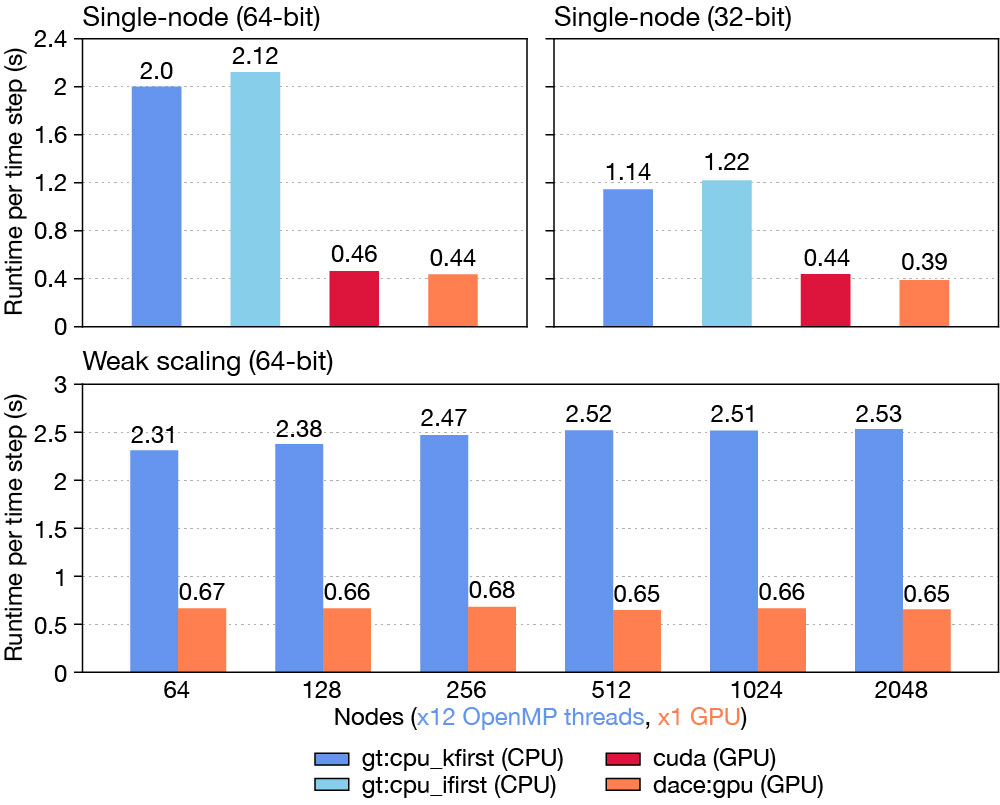

GT4Py systematically separates the user code from optimisations that depend on the hardware architecture, rather than handling everything in a single source code, as is common in traditional designs. The user code contains the definitions of the computational stencils (e.g. discrete operators of the spatial discretisation, advection schemes, time integration), expressed in the embedded GT4Py domain-specific language (DSL). An optimising toolchain in GT4Py then transforms this high-level representation into a finely tuned implementation for the target hardware architecture (see the presentation of results in the first figure).
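The separation described above can be illustrated with a minimal sketch in plain Python. It deliberately does not use the GT4Py API: the names `laplacian`, `run_loops`, `run_rowwise`, `BACKENDS` and `apply_stencil` are hypothetical, and the two "backends" are only stand-ins for real hardware-specific code generators. The point is that the stencil is defined once, point-wise, while the choice of how to execute it is made elsewhere.

```python
from typing import Callable, Dict, List

Field = List[List[float]]
Stencil = Callable[[float, float, float, float, float], float]


def laplacian(c: float, w: float, e: float, s: float, n: float) -> float:
    """User code: point-wise definition of a 5-point Laplacian stencil."""
    return w + e + s + n - 4.0 * c


def run_loops(stencil: Stencil, field: Field) -> Field:
    """Hypothetical backend 1: explicit nested loops over interior points."""
    ni, nj = len(field), len(field[0])
    out = [[0.0] * nj for _ in range(ni)]
    for i in range(1, ni - 1):
        for j in range(1, nj - 1):
            out[i][j] = stencil(
                field[i][j],
                field[i - 1][j], field[i + 1][j],
                field[i][j - 1], field[i][j + 1],
            )
    return out


def run_rowwise(stencil: Stencil, field: Field) -> Field:
    """Hypothetical backend 2: same result, computed row by row via slices."""
    nj = len(field[0])
    out = [[0.0] * nj]  # boundary row is left at zero
    for up, mid, down in zip(field, field[1:], field[2:]):
        interior = [
            stencil(c, w, e, s, n)
            for c, w, e, s, n in zip(
                mid[1:-1], up[1:-1], down[1:-1], mid[:-2], mid[2:]
            )
        ]
        out.append([0.0] + interior + [0.0])
    out.append([0.0] * nj)  # boundary row is left at zero
    return out


# The driver selects a backend by name; the stencil definition never changes.
BACKENDS: Dict[str, Callable[[Stencil, Field], Field]] = {
    "loops": run_loops,
    "rowwise": run_rowwise,
}


def apply_stencil(stencil: Stencil, field: Field, backend: str = "loops") -> Field:
    return BACKENDS[backend](stencil, field)


# Test field f(i, j) = i^2 + j^2, whose discrete Laplacian is 4 in the interior.
field = [[float(i * i + j * j) for j in range(5)] for i in range(4)]
result_loops = apply_stencil(laplacian, field, backend="loops")
result_rowwise = apply_stencil(laplacian, field, backend="rowwise")
```

Both calls return the same field, so switching the execution strategy requires no change to `laplacian`. In GT4Py the analogous step is performed by the optimising toolchain, which generates the backend code rather than relying on hand-written alternatives as in this sketch.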

Productivity can be maintained with the GT4Py approach because the common high-level ‘driver’ interface is completely agnostic to the target hardware, so optimisations and new architectures can be supported without changing the application. Furthermore, Python is a popular and advanced programming language with concise syntax, comprehensive documentation, an extensive set of libraries (e.g. for unit testing, data analysis, machine learning, visualisation), a low barrier to entry for domain scientists and academia, and relatively straightforward prototyping. Python can also integrate seamlessly with lower-level languages such as C++ and Fortran, making it a good fit for DSLs.

Preliminary results

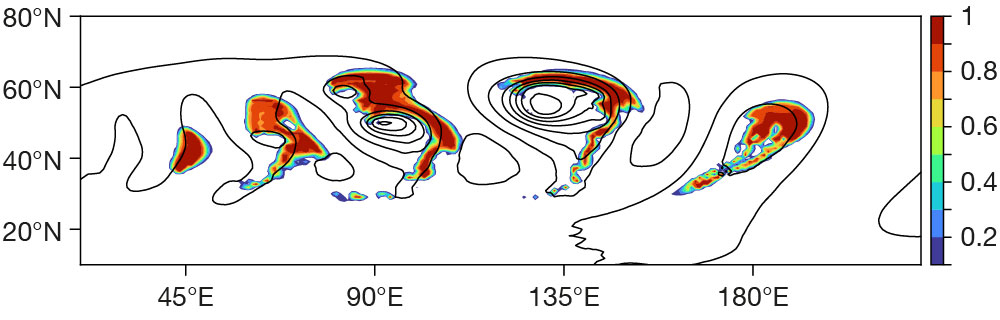

We present results from an intermediate stage of the FVM porting effort, using the first version of GT4Py restricted to structured grids. We consider the FVM dynamical core coupled to the IFS prognostic cloud microphysics scheme, implemented entirely in Python using GT4Py. For the performance measures shown in the first figure, we performed short runs of the moist baroclinic wave benchmark exemplified in the second figure.

Once ported to GT4Py, FVM can employ various user-selectable backends for CPU and GPU hardware, using either 64‑bit or 32‑bit floating point precision. Two CPU C++ backends specialised for different array layouts were tested. The two GPU backends tested execute either with native CUDA C++ or by leveraging the data-centric (DaCe) framework of ETH’s Scalable Parallel Computing Laboratory. The first figure’s bottom panel shows parallel scaling of the distributed model using the GHEX library (funded by the Partnership for Advanced Computing in Europe, PRACE) across multiple CPU or GPU nodes. Weak scaling is considered from about 14 km down to 1.7 km grid spacing, with a corresponding increase in compute nodes from 64 to 2,048 (grid spacings are given for the equatorial region; finer spacings apply away from the equator due to the regular longitude-latitude grid that is employed temporarily). Near-optimal scaling (i.e. the same runtime) is achieved in the given range, somewhat better with GPUs. Development and performance studies are continuing on other platforms with different hardware.
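For readers less familiar with weak scaling, the criterion of "the same runtime" can be expressed as an efficiency: the runtime at the smallest node count divided by the runtime at each larger node count, where the problem size grows with the number of nodes. The sketch below is a generic illustration, with a hypothetical function name `weak_scaling_efficiency` and placeholder runtimes that are not measurements from this study; only the node counts 64 and 2,048 are taken from the text.

```python
from typing import Dict


def weak_scaling_efficiency(runtimes: Dict[int, float]) -> Dict[int, float]:
    """Return the weak-scaling efficiency for each node count.

    `runtimes` maps node count to wall-clock runtime, with the problem
    size assumed to grow in step with the node count. An efficiency of
    1.0 means the runtime is unchanged relative to the smallest run.
    """
    base_runtime = runtimes[min(runtimes)]
    return {nodes: base_runtime / t for nodes, t in sorted(runtimes.items())}


# Placeholder runtimes in seconds (made up for illustration, NOT measured).
example_runtimes = {64: 100.0, 2048: 105.0}
efficiency = weak_scaling_efficiency(example_runtimes)
```

With these placeholder values, the run on 2,048 nodes would take 5% longer than the run on 64 nodes, giving an efficiency of roughly 0.95. Values close to 1.0 correspond to what the text calls near-optimal scaling.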

Outlook

The preliminary results presented indicate the potential of the GT4Py approach to achieve performance portability and productivity in the context of future numerical weather prediction models. This is also supported by the latest results with the ICON model (a first GT4Py-enabled dynamical core on GPUs already performs on a par with the Fortran and OpenACC version) and by an earlier GT4Py porting project for the US FV3 model at the Allen Institute for AI.

The porting and development of FVM is continuing with the next version of GT4Py, which is equipped with a new declarative interface and supports the unstructured meshes required for the global configuration with the quasi-uniform IFS octahedral grid. The declarative GT4Py introduces important new features for more modular and concise programming in the user code and enables GT4Py to optimise more freely and aggressively.

Updates with FVM based on the new declarative GT4Py are expected in 2023 and 2024. From mid‑2023, ECMWF will host a computer scientist position for GT4Py, which is funded by the EU ESiWACE3 project.